The team that hacked Amazon Echo & other smart speakers using a laser pointer continues to investigate why MEMS microphones respond to light.

Imagine hacking into an Amazon Alexa device using a laser beam, & then doing some online shopping using the owner's account!

This is the scenario presented by a research group that is exploring why digital home assistants, & other sensing systems that use sound commands to perform functions, can be hacked by light.

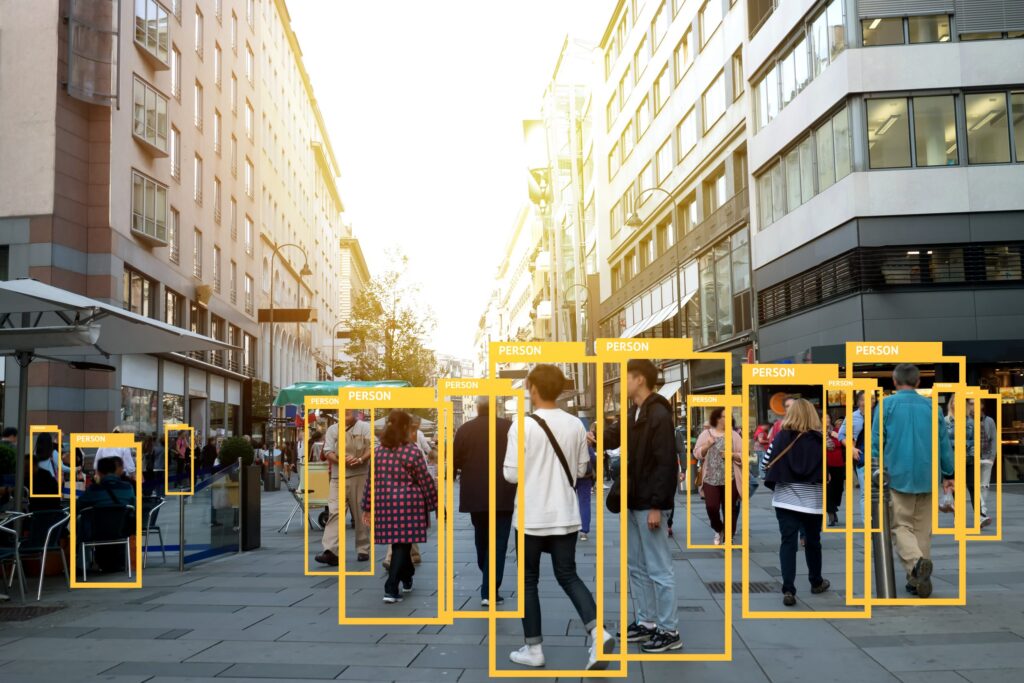

Smart Speakers

The same team that in 2020 mounted a signal-injection attack against a range of smart speakers using nothing more than a laser pointer is still unraveling the mystery of why the micro-electro-mechanical systems (MEMS) microphones in the products respond to light signals as if they were sound.

The researchers said then that they were able to launch ‘inaudible commands’ by shining lasers, from as far as 360 ft away, at the microphones on various popular voice assistants, including Amazon Alexa, Apple Siri, Facebook Portal, & Google Assistant.

Electrical Signal

“By modulating an electrical signal in the intensity of a light beam, attackers can trick microphones into producing electrical signals as if they are receiving genuine audio,” said researchers at the time.
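The idea in that quote can be illustrated with a small amplitude-modulation sketch. It is not the researchers' tooling: the function names, the bias & swing values, & the linear microphone model are all assumptions made for illustration, & the article itself notes that the physical cause of the effect is still being investigated.

```python
import math

def tone(freq_hz, sample_rate, n_samples):
    """Generate a sine tone with samples in [-1, 1] (stand-in for a voice command)."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]

def modulate_intensity(audio, bias, peak_to_peak):
    """Map audio samples onto a light-intensity signal around a DC bias.

    Light intensity cannot be negative, so the bias must be at least
    half of the peak-to-peak swing. Units are arbitrary (hypothetical).
    """
    if peak_to_peak / 2 > bias:
        raise ValueError("bias must be at least half the peak-to-peak swing")
    out = []
    for s in audio:
        s = max(-1.0, min(1.0, s))          # clip to the valid audio range
        out.append(bias + (peak_to_peak / 2) * s)
    return out

def recover_audio(intensity):
    """Assumed, simplified microphone model: drop the DC offset, keep the rest."""
    mean = sum(intensity) / len(intensity)
    return [x - mean for x in intensity]

# One full cycle of a 1 kHz tone sampled at 48 kHz.
audio = tone(1000, 48000, 48)
intensity = modulate_intensity(audio, bias=1.0, peak_to_peak=1.0)
recovered = recover_audio(intensity)
```

Under this assumed model, `recovered` is a scaled copy of `audio`, which is the sense in which a microphone could be tricked into producing the same electrical signal that real sound would.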

The team (Sara Rampazzi, an assistant professor at the University of Florida, & Benjamin Cyr & Daniel Genkin, a PhD student & an assistant professor, respectively, at the University of Michigan) has expanded these light-based attacks beyond digital assistants into other aspects of the connected home.

Digital Assistants

They broadened their research to show how light can be used to manipulate not only a wider range of digital assistants, including the Amazon Echo 3, but also sensing systems found in medical devices, autonomous vehicles, industrial systems & even space systems.

The researchers also examined how the devices connected to voice-activated assistants, e.g. smart locks, home switches & even cars, suffer from common security vulnerabilities that can make these attacks more dangerous.

Home Devices

The report shows how using a digital assistant as the way in can let attackers take control of other home devices. Once an attacker controls a digital assistant, he or she can operate any device connected to it that also responds to voice commands.

These attacks can become even more complex if these devices are connected to other elements of a smart home, e.g. smart door locks, garage doors, computers & even cars, they commented.

User Authentication

“User authentication on these devices is often lacking, allowing the attacker to use light-injected voice commands to unlock the target’s Smartlock-protected front doors, open garage doors, shop on e-commerce websites at the target’s expense, or even unlock & start various vehicles connected to the target’s Google account (e.g., Tesla & Ford),” researchers wrote.

The team plans to present the evolution of its research at Black Hat Europe on Dec. 10, though its members acknowledge they still aren’t entirely sure why the light-based attack works, Cyr said in a report published on Dark Reading.

“There’s still some mystery around the physical causality on how it’s working,” he told the publication. “We’re investigating that more in-depth.”

Microphones

The attack that researchers outlined in 2020, dubbed “light commands,” exploited the design of the microphones in smart assistants, including the latest generation of Amazon Echo, Apple Siri, Facebook Portal & Google Home.

Researchers focused on the MEMS microphones, which work by converting sound (voice commands) into electrical signals. However, the team said that it was able to launch inaudible commands by shining lasers at the microphones from as far as 110 meters, or 360 feet.

Mitigations

The team does offer some mitigations for these attacks from both software and hardware perspectives. On the software side, users can add an extra layer of authentication on devices to “somewhat” prevent attacks, although usability can suffer, researchers suggested.
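As a rough illustration of what such an extra authentication layer might look like, the sketch below gates sensitive voice commands behind a PIN check. The intent names, the `authorize` function & the PIN scheme are hypothetical & do not describe any vendor's actual API.

```python
import hmac

# Hypothetical set of commands considered sensitive enough to need a PIN.
SENSITIVE_INTENTS = {"unlock_door", "open_garage", "purchase", "start_vehicle"}

def authorize(intent, supplied_pin, expected_pin):
    """Return True if the command may run.

    Non-sensitive commands pass through; sensitive ones require a matching PIN.
    """
    if intent not in SENSITIVE_INTENTS:
        return True
    if supplied_pin is None:
        return False
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(supplied_pin.encode(), expected_pin.encode())

# Example: a light-injected "unlock_door" command without the PIN is refused.
allowed = authorize("unlock_door", None, "4821")
```

This shows the usability trade-off the researchers mention: every sensitive command now takes an extra step, & a PIN that is itself spoken aloud only “somewhat” helps, since it could be injected too if an attacker learns it.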

In terms of hardware, reducing the amount of light that reaches the microphones by using a barrier or diffracting film to physically block straight light beams — allowing soundwaves to detour around the obstacle — could help mitigate attacks, they concluded.